Mathematical Analysis

Lecture 16

The logarithm and the exponential

We now have the tools to properly define the exponential and the logarithm that you know so well. We start with exponentiation.

If $n$ is a positive integer, we define \begin{equation*} x^n := \underbrace{x \cdot x \cdot \cdots \cdot x}_{n \text{ times}} . \end{equation*}


We also define $x^0:=1$. For negative exponents and $x \neq 0$, define $x^{-n} := \dfrac{1}{x^n}$. If $x > 0$, define $x^{1/n}$ as the unique positive $n$th root. Finally, for $x > 0$ and a rational number $\dfrac{n}{m}$ in lowest terms (with $m > 0$), define
\begin{equation*} x^{n/m} := {\bigl(x^{1/m}\bigr)}^n . \end{equation*}
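These definitions can be traced numerically. The following is a minimal sketch, assuming $x > 0$; the helper names `nth_root`, `integer_power` and `rational_power` are hypothetical, and the positive $m$th root is found by bisection, a choice made only for this illustration.

```python
from fractions import Fraction


def nth_root(x: float, m: int) -> float:
    """Unique positive m-th root of x > 0, found by bisection (an assumption of this sketch)."""
    if x <= 0 or m <= 0:
        raise ValueError("need x > 0 and a positive integer m")
    # t -> t**m is increasing on [0, inf), and the root lies in [0, max(1, x)].
    lo, hi = 0.0, max(1.0, x)
    for _ in range(200):  # 200 halvings shrink the interval below float resolution
        mid = (lo + hi) / 2
        if mid ** m < x:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def integer_power(x: float, n: int) -> float:
    """x**n by repeated multiplication, with x**0 = 1 and x**(-n) = 1 / x**n (x != 0)."""
    result = 1.0
    for _ in range(abs(n)):
        result *= x
    return result if n >= 0 else 1.0 / result


def rational_power(x: float, q: Fraction) -> float:
    """x**(n/m) := (x**(1/m))**n for x > 0, with n/m in lowest terms."""
    # Fraction always stores n/m in lowest terms with a positive denominator m.
    return integer_power(nth_root(x, q.denominator), q.numerator)


print(rational_power(8.0, Fraction(2, 3)))   # close to 4.0
print(rational_power(2.0, Fraction(-1, 2)))  # close to 1 / sqrt(2)
```

Using `Fraction` means the "lowest terms" requirement is handled automatically.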


Now, what do we mean by $\sqrt{3}^{\sqrt{5}}$? 🤔

Or $x^y$ in general?

In particular, what is $e^x$ for all $x$? And how do we solve $y=e^x$ for $x$?

Here we are going to answer these questions.

8.3 The logarithm

Theorem 8.3.1. There exists a unique function $L \colon (0,\infty) \to \R$ such that

1. $L(1) = 0$.

2. $L$ is differentiable and $L'(x) = \dfrac{1}{x}$.

3. $L(xy) = L(x)+L(y)$ for all $x,y \in (0,\infty)$.


Proof. To show existence, we define a candidate and show that it satisfies all three properties. Let \begin{equation*} L(x) := \int_1^x \frac{1}{t}~dt . \end{equation*} This integral exists for every $x > 0$, because $\frac{1}{t}$ is continuous on the closed interval between $1$ and $x$. Property (1) holds, since $L(1) = \displaystyle \int_1^1\frac{1}{t} ~dt = 0$.
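The definition of $L$ and property (1) can be checked numerically. This sketch approximates the integral with composite Simpson's rule, which is an assumption made here (the lecture uses the exact Riemann integral); Python's `math.log` serves only as a reference value.

```python
import math


def L(x: float, n: int = 1000) -> float:
    """Approximate L(x) = integral of 1/t from 1 to x by composite Simpson's rule.

    n is the (even) number of subintervals. For x < 1 the step h is negative,
    so the oriented integral comes out negative automatically.
    """
    if x <= 0:
        raise ValueError("L is only defined on (0, infinity)")
    if n % 2:
        raise ValueError("Simpson's rule needs an even number of subintervals")
    h = (x - 1.0) / n
    total = 1.0 + 1.0 / x  # f(t_0) + f(t_n) with f(t) = 1/t
    for k in range(1, n):
        t = 1.0 + k * h
        total += (4 if k % 2 else 2) / t  # Simpson weights 4, 2, 4, ...
    return total * h / 3


for x in (1.0, 0.5, 2.0, 10.0):
    print(x, L(x), math.log(x))
```

For $x = 1$ the step is $h = 0$, so the sketch returns exactly $0$, matching property (1).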


Property (2) holds by the second form of the fundamental theorem of calculus: since $f(t)= \dfrac{1}{t}$ is continuous on $(0,\infty)$, $L$ is differentiable and \[ L'(x) = \frac{1}{x}. \]

In particular, $L$ is continuous.


To prove property (3), fix $y \in (0,\infty)$ and change variables $u=yt$, so that $du = y\,dt$ and $\frac{dt}{t} = \frac{du}{u}$. We obtain
\begin{equation*}
\begin{aligned}
L(x) = \int_1^{x} \frac{1}{t}~dt &= \int_y^{xy} \frac{1}{u}~du \\
&= \int_1^{xy} \frac{1}{u}~du - \int_1^{y} \frac{1}{u}~du \\
&= L(xy)-L(y) ,
\end{aligned}
\end{equation*}
that is, $L(xy) = L(x) + L(y)$.
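The change of variables can be checked numerically: all three quantities below should agree up to quadrature error. This is a sketch only; the helper names `simpson` and `recip` are illustrative, and Simpson's rule stands in for the exact integrals.

```python
def simpson(f, a: float, b: float, n: int = 2000) -> float:
    """Composite Simpson's rule for the oriented integral of f from a to b (n even)."""
    h = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(a + k * h)
    return total * h / 3


def recip(t: float) -> float:
    """The integrand 1/t."""
    return 1.0 / t


x, y = 3.0, 5.0
lhs = simpson(recip, 1.0, x)                                      # L(x)
shifted = simpson(recip, y, x * y)                                # integral over [y, xy] after u = y t
difference = simpson(recip, 1.0, x * y) - simpson(recip, 1.0, y)  # L(xy) - L(y)
print(lhs, shifted, difference)
```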


Finally, we prove uniqueness using only properties (1) and (2). Suppose $F \colon (0,\infty) \to \R$ satisfies $F(1) = 0$ and $F'(x) = \dfrac{1}{x}$. Since $F'$ is continuous, the first form of the fundamental theorem of calculus gives
\begin{equation*} F(x) = F(x) - F(1) = \int_1^x \frac{1}{t}~dt = L(x) \end{equation*}
for all $x > 0$. $\;\blacksquare$

[Figure: $L \colon (0,\infty) \to \R$]


More properties of $L \colon (0,\infty) \to \R$

- $L$ is strictly increasing,

- $L$ is bijective,

- $\displaystyle \lim_{x\to 0^{+}} L(x) = -\infty, \;$ and $\;\displaystyle \lim_{x\to \infty} L(x) = \infty .$
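These properties follow from Theorem 8.3.1 alone. One standard argument, sketched here rather than quoted from the lecture, uses only (1), (2), (3), the mean value theorem and the intermediate value theorem:
\begin{equation*}
\begin{aligned}
& L'(x) = \tfrac{1}{x} > 0 \text{ on } (0,\infty) \;\Longrightarrow\; L \text{ is strictly increasing, so } L(2) > L(1) = 0 , \\
& L(2^n) = n\,L(2) \to \infty \quad\text{and}\quad L(2^{-n}) = -L(2^n) = -n\,L(2) \to -\infty \quad (n \to \infty) , \\
& \text{an increasing function unbounded above and below in this way has the two limits stated,} \\
& \text{and since } L \text{ is continuous, the intermediate value theorem shows that } L \text{ takes every real value.}
\end{aligned}
\end{equation*}
Here $L(2^{-n}) = -L(2^n)$ because $L(2^n) + L(2^{-n}) = L(1) = 0$ by (3) and (1).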


Now that we have proved that there is a unique function with these properties, we simply define the logarithm, or natural logarithm, by
\begin{equation*} \ln(x) := L(x) . \end{equation*}

8.4 The exponential

Theorem 8.4.1. There exists a unique function $E \colon \R \to (0,\infty)$ such that

1. $E(0) = 1$.

2. $E$ is differentiable and $E'(x) = E(x)$.

3. $E(x+y) = E(x)E(y)$ for all $x,y \in \R$.


Proof. As we did for $\ln x$, we prove existence by defining a candidate and showing that it satisfies all the properties.

The function $L(x) = \ln (x)$ defined previously is strictly increasing and bijective, hence invertible. Let $E \colon \R \to (0,\infty)$ be the inverse function of $L$. Property (1) is immediate: $L(1) = 0$ gives $E(0) = 1$.


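Property (2) follows from the inverse function theorem: $L$ is continuous, strictly increasing, and differentiable with $L'(y) = \dfrac{1}{y} \neq 0$ for every $y > 0$. Hence $E$ is differentiable and
\begin{equation*} E'(x) = \frac{1}{L'\bigl(E(x)\bigr)} = \frac{1}{1/E(x)} = E(x) . \end{equation*}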
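A small numerical sketch of this construction: it defines `E` by inverting `math.log` with bisection (an assumption; the proof only uses that $L$ is a bijection) and compares a central difference quotient with $E(x)$. The name `E` mirrors the notation of the theorem; `math.exp` appears only in the check.

```python
import math


def E(x: float) -> float:
    """Inverse of ln, computed by bisection: the unique y > 0 with ln(y) = x.

    Assumes |x| is moderate (roughly below 700) so that the answer is a finite float.
    """
    # Bracket the root; ln is strictly increasing, so doubling/halving works.
    lo = hi = 1.0
    while math.log(hi) < x:
        hi *= 2.0
    while math.log(lo) > x:
        lo /= 2.0
    # Invariant: ln(lo) <= x <= ln(hi).
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if math.log(mid) < x:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


h = 1e-5
for x in (-2.0, 0.0, 1.0, 3.0):
    derivative = (E(x + h) - E(x - h)) / (2 * h)  # central difference for E'(x)
    print(x, E(x), derivative, math.exp(x))
```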


To prove property (3), we use the corresponding property of the logarithm. Take $x, y \in \R$. As $L$ is a bijection onto $\R$, there exist $a, b > 0$ such that $x = L(a)$ and $y = L(b)$, that is, $E(x) = a$ and $E(y) = b$. Then
\begin{equation*} E(x+y) = E\bigl(L(a)+L(b)\bigr) = E\bigl(L(ab)\bigr) = ab = E(x)E(y) . \end{equation*}
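The steps of this argument can be traced with concrete numbers. In this sketch Python's `math.log` and `math.exp` stand in for $L$ and $E$; they are floating-point functions, so the equalities hold only up to rounding, and the values of `a` and `b` are arbitrary.

```python
import math

# Pick a, b > 0 and set x = L(a), y = L(b), as in the proof.
a, b = 2.0, 3.0
x, y = math.log(a), math.log(b)

# E(x + y) = E(L(a) + L(b)) = E(L(ab)) = ab = E(x) E(y)
print(math.exp(x + y), a * b, math.exp(x) * math.exp(y))
```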


Uniqueness follows from (1) and (2). Let $E$ be the function constructed above, and let $F$ be any function on $\R$ satisfying (1) and (2). By the product rule and the chain rule,
\begin{equation*}
\begin{aligned}
\frac{d}{dx} \Bigl( F(x)E(-x) \Bigr) &= F'(x)E(-x) - E'(-x)F(x) \\
&= F(x)E(-x) - E(-x)F(x) = 0 .
\end{aligned}
\end{equation*}
By Theorem 6.3.1, $F(x)E(-x)$ is therefore constant, so $F(x)E(-x) = F(0)E(-0) = 1$ for all $x \in \R$.


Next, applying property (3) to $E$,
\begin{equation*} 1 = E(0) = E(x-x) = E(x) E(-x) . \end{equation*}
Then
\begin{equation*} 0 = 1-1 = F(x)E(-x) - E(x)E(-x) = \bigl(F(x)-E(x)\bigr) E(-x) . \end{equation*}
Finally, $E(-x) > 0$, so in particular $E(-x) \neq 0$, for all $x \in \R$. Hence $F(x)-E(x) = 0$ for all $x$, and this completes the proof. $\blacksquare$
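As a numerical illustration of the uniqueness argument (not a proof), one can build a function satisfying (1) and (2) without using $\ln$ at all. This sketch solves $F' = F$, $F(0) = 1$ with the classical fourth-order Runge-Kutta method (a technique chosen only for this example; `solve_F` is a hypothetical name) and checks that $F(x)E(-x)$ stays at $1$, with `math.exp` standing in for $E$.

```python
import math


def solve_F(x: float, steps: int = 1000) -> float:
    """Approximate F(x) for F' = F, F(0) = 1 using classical RK4 with a fixed step."""
    h = x / steps  # negative step when x < 0, which RK4 handles the same way
    f = 1.0        # F(0) = 1
    for _ in range(steps):
        # Right-hand side of the ODE is g(t, F) = F, so each stage is just the current value.
        k1 = f
        k2 = f + 0.5 * h * k1
        k3 = f + 0.5 * h * k2
        k4 = f + h * k3
        f += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
    return f


for x in (-2.0, 0.0, 1.0, 3.0):
    F_x = solve_F(x)
    # The proof shows F(x) * E(-x) is constant, equal to 1.
    print(x, F_x, F_x * math.exp(-x))
```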

[Figure: $E \colon \R \to (0,\infty)$]


More properties of $E \colon \R \to (0,\infty) $

- $E$ is strictly increasing,

- $E$ is bijective,

- $\displaystyle \lim_{x\to -\infty} E(x) = 0 ,\;$ and $\;\displaystyle \lim_{x\to \infty} E(x) = \infty .$


Now we can define the exponential by
\begin{equation*} \exp(x) := E(x) . \end{equation*}

If $y \in \Q$ and $x > 0$, then, using properties (1) and (3) of the logarithm, \begin{equation*} x^y = \exp\bigl(\ln(x^y)\bigr) = \exp\bigl(y\ln(x)\bigr) . \end{equation*}

We can now make sense of exponentiation $x^y$ for arbitrary $y \in \R$; if $x > 0$ and $y$ is irrational, define \begin{equation*} x^y := \exp\bigl(y\ln(x)\bigr) . \end{equation*}
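The definition $x^y := \exp\bigl(y\ln(x)\bigr)$ is directly computable, which answers the opening question about $\sqrt{3}^{\sqrt{5}}$ numerically. In this sketch Python's `math.exp` and `math.log` play the roles of $\exp$ and $\ln$; the name `real_power` is hypothetical.

```python
import math


def real_power(x: float, y: float) -> float:
    """x**y := exp(y * ln(x)) for x > 0 and any real y."""
    if x <= 0:
        raise ValueError("this definition requires x > 0")
    return math.exp(y * math.log(x))


print(real_power(math.sqrt(3), math.sqrt(5)))  # sqrt(3) ** sqrt(5)
print(real_power(8.0, 2 / 3))                  # agrees with the rational definition: about 4
```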


Define the number $e$ as: \begin{equation*} e := \exp(1) . \end{equation*} This is known as Euler's number or the base of the natural logarithm.

This justifies the notation $e^x$ for $\exp(x)$: since $\ln(e) = \ln\bigl(\exp(1)\bigr) = 1$, \begin{equation*} e^x = \exp\bigl(x \ln(e) \bigr) = \exp(x) . \end{equation*}
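Since $\ln(e) = 1$, the number $e$ is the unique $y > 0$ with $\int_1^y \frac{1}{t}~dt = 1$. The sketch below finds it from the integral alone, assuming Simpson's rule for the integral and bisection for the equation (both choices made only for this example); `e_approx` is a hypothetical name, and `math.exp(1)` is printed just for comparison.

```python
import math


def L(x: float, n: int = 2000) -> float:
    """Composite Simpson approximation of the integral of 1/t from 1 to x (n even)."""
    h = (x - 1.0) / n
    total = 1.0 + 1.0 / x
    for k in range(1, n):
        total += (4 if k % 2 else 2) / (1.0 + k * h)
    return total * h / 3


# e = exp(1) is the unique y > 0 with L(y) = 1; bisect on [2, 3], where L(2) < 1 < L(3).
lo, hi = 2.0, 3.0
for _ in range(60):
    mid = 0.5 * (lo + hi)
    if L(mid) < 1.0:
        lo = mid
    else:
        hi = mid
e_approx = 0.5 * (lo + hi)
print(e_approx, math.exp(1))
```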


Theorem 8.4.2. Let $x, y \in \R$.

- $\exp(xy) = {\bigl(\exp(x)\bigr)}^y$.

- If $x > 0$, then $\ln(x^y) = y \ln (x)$.
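A quick floating-point sanity check of both identities, using `math.exp`, `math.log` and Python's `**` operator as stand-ins for $\exp$, $\ln$ and real exponentiation. The sample values of `x` and `y` are arbitrary.

```python
import math

x, y = 1.5, -0.7

# exp(xy) = (exp(x))**y
print(math.exp(x * y), math.exp(x) ** y)

# For x > 0: ln(x**y) = y ln(x)
print(math.log(x ** y), y * math.log(x))
```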

Why do we need logarithms anyway?


Imagine we are living in 1823 and we need to compute \[ x=\sqrt[3]{\frac{493.8\times \left(23.67\right)^2}{5.104}}. \]

😬 ❌🖥️

Why would you need to make such a calculation?

🧭 🗺️ 🌌 🔭


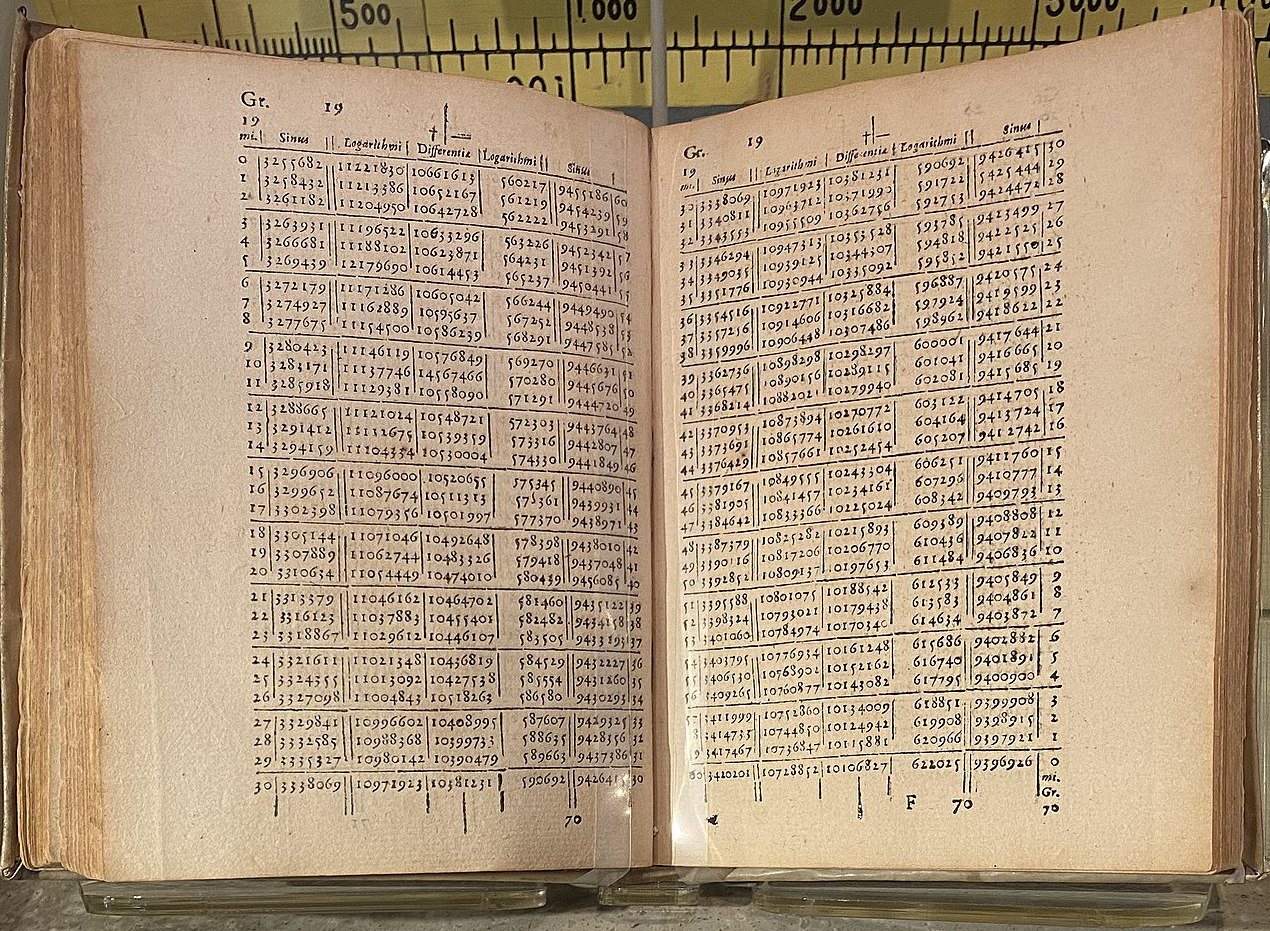


We can write $\,\displaystyle x=\left(\frac{493.8\times \left(23.67\right)^2}{5.104}\right)^{1/3}.$

Using the properties of logarithms (any fixed base works; printed tables used base 10), we have

$\displaystyle \log x=\frac{1}{3}\Bigl(\log (493.8)+2\log (23.67)-\log (5.104)\Bigr).$

Then we look up these values in logarithmic tables 📖, combine them, and look up the number whose logarithm is the result.

👉 $\;x\approx 37.84$
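The table procedure can be replayed in floating point, with `math.log10` standing in for the printed tables and `10 ** log_x` for the reverse lookup. Full double-precision evaluation gives roughly $37.845$, so the value $37.84$ above is consistent with it at the accuracy of printed tables.

```python
import math

# Base-10 logarithms, as in the printed tables.
log_x = (math.log10(493.8) + 2 * math.log10(23.67) - math.log10(5.104)) / 3
x_via_logs = 10 ** log_x  # reverse lookup: the number whose logarithm is log_x

# Direct evaluation for comparison.
x_direct = (493.8 * 23.67 ** 2 / 5.104) ** (1 / 3)

print(round(x_via_logs, 3), round(x_direct, 3))
```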



Logarithms were discovered independently by John Napier and Jost Bürgi at the beginning of the 17th century.

However, they did not know about the relationship between the exponential function ($e^x$, where $e$ is Euler's number) and the natural logarithm ($\ln x$, i.e., the logarithm with base $e$).

This relationship was discovered later (circa 1647) by Grégoire de Saint-Vincent, who was trying to compute the area under the hyperbola $y = 1/x$.


Area under $y = 1/t$ from $1$ to $x$ $= \displaystyle\int_1^x \frac{1}{t}~dt = \ln x$ (for $x > 1$).

How cool is that? 😃

References

- Merzbach, U. C. & Boyer, C. B. (2011). A History of Mathematics. 3rd ed. USA: John Wiley & Sons.

- Maor, E. (1994). e: The Story of a Number. USA: Princeton University Press.

- Napier, J. (1614). Mirifici logarithmorum canonis descriptio.

- Napier, J. (1619). Mirifici logarithmorum canonis constructio.


Since nothing is more tedious, fellow mathematicians, in the practice of the mathematical arts, than the great delays suffered in the tedium of lengthy multiplications and divisions, the finding of ratios, and in the extraction of square and cube roots- and in which not only is there the time delay to be considered, but also the annoyance of the many slippery errors that can arise.

John Napier (1614)